What is latency anyway?

Many vendors of video streaming solutions use terms such as "latency", "low-latency", "ultra-low latency", "zero latency" or even "no latency". Without clear numbers, however, these claims are difficult to evaluate.

"Latency refers to time interval or delay when a system component is waiting for another system component to do something. This duration of time is called latency."

Techopedia.com

According to a study from MIT*, the human eye starts to perceive latency at around 13 milliseconds. All of intoPIX's technologies stay below this threshold.

But let's set perception aside and get down to the numbers. When we speak about the TICO SMPTE RDD35 lightweight low-latency technology, we speak about MICROSECONDS of latency. But how much, or rather how fast, is that actually?

Here are the basics:

How can we calculate the final latency?

Since TICO is a line-based compression technology, let's first relate latency in frames and lines to latency in (milli)seconds for a video stream running at 60 frames per second:

| Latency in seconds | Latency in frames |
| --- | --- |
| 1 second | 60 frames |
| 333 milliseconds | 20 frames |
| 50 milliseconds | 3 frames |
| 16.66 milliseconds | 1 frame |
| 8.33 milliseconds | 0.5 frames |
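The correspondence between frames and milliseconds can be reproduced with a few lines of Python. This is only an illustrative sketch: the function name `frames_to_ms` is hypothetical, and the 60 frames per second default is taken from the example above.

```python
def frames_to_ms(frames: float, fps: float = 60.0) -> float:
    """Return the duration of `frames` frames in milliseconds at `fps` frames per second."""
    return frames * 1000.0 / fps


if __name__ == "__main__":
    # Frame counts used in the comparison above (60 fps assumed).
    for frames in (60, 20, 3, 1, 0.5):
        print(f"{frames:>4} frames = {frames_to_ms(frames):8.2f} ms")
```

Changing the `fps` argument shows how the same number of frames translates into a different delay at other frame rates.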

TICO technology can reach a latency as low as 6.5 lines of pixels at the encoder and 6 lines of pixels at the decoder, adding up to a total latency of just 12.5 lines.** For a UHDTV 4K video stream, in which each frame is 2160 lines high, this works out as follows:

12.5 lines / 2160 lines × 16.66 milliseconds = 0.0964 milliseconds


TICO 4K60P real-time encoding plus decoding takes only about 96 MICROSECONDS.

**TICO profile 1 when implemented on a chip (FPGA/ASIC).
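The line-based calculation above can be sketched in Python as follows. This is a rough illustration, not intoPIX code: the function name `line_latency_us` is hypothetical, and, like the calculation in the text, it assumes that one frame time is spread evenly over the 2160 visible lines and ignores any blanking lines.

```python
def line_latency_us(latency_lines: float,
                    lines_per_frame: int = 2160,
                    fps: float = 60.0) -> float:
    """Convert a latency expressed in video lines into microseconds.

    Assumption: the frame time is divided evenly over `lines_per_frame`
    lines (blanking is ignored, as in the article's simplified formula).
    """
    frame_time_us = 1_000_000.0 / fps
    return latency_lines / lines_per_frame * frame_time_us


if __name__ == "__main__":
    encoder_lines = 6.5   # encoder latency quoted in the text
    decoder_lines = 6.0   # decoder latency quoted in the text
    total_lines = encoder_lines + decoder_lines
    latency = line_latency_us(total_lines)
    print(f"{total_lines} lines at 4K60p = {latency:.2f} microseconds")
```

The same function can be called with other frame heights or frame rates, for example `line_latency_us(12.5, lines_per_frame=1080, fps=60.0)`, to see how the figure scales.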

So, could we say "zero"?

It is less than 1 frame, so is it "zero frame latency"? It is less than a millisecond, so is it "zero millisecond latency"? It all depends on your perspective. What is clear, however, is that at this level latency is no longer a concern. It is so low that you cannot notice it with the naked eye, and it has no practical impact on the application.

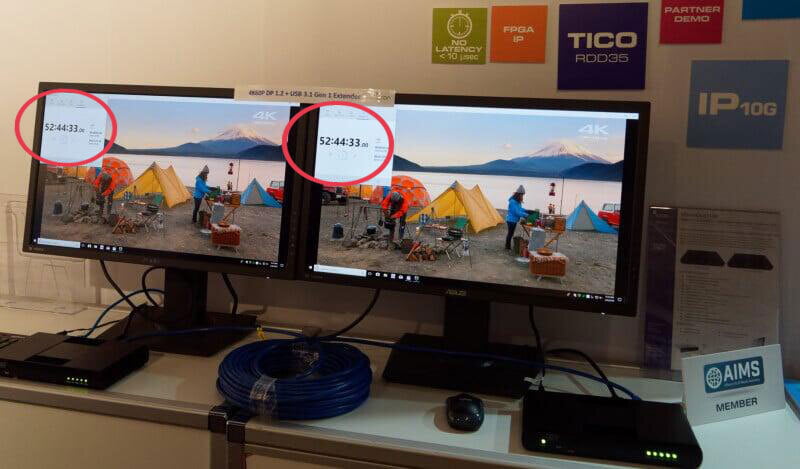

If you take a single picture showing both the source display and the destination display, with the video passing through our encoder and decoder, you will not capture any latency, even when both screens display time code information!

Thus, can we say "zero"? We believe we can!

Not yet convinced? Request an evaluation.

Related content

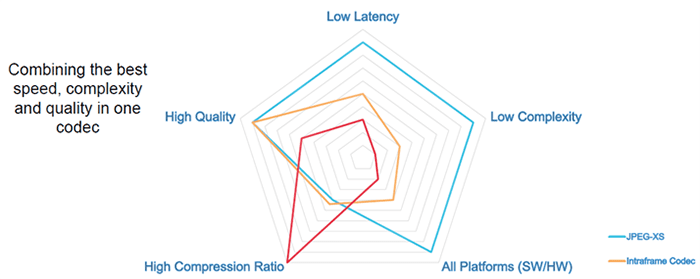

What makes JPEG XS Different from Other Codecs ?

JPEG XS is the successor of TICO. Learn about JPEG XS, the new ISO standard for lightweight low-latency video coding, co-created by the intoPIX engineering team.

AR, VR, MR. intoPIX Solutions to Make XR Better

Learn about intoPIX solutions enabling zero lag, zero latency, high-performance 8K transmission of video from the source to the head-mounted display.