SMPTE 2110 Professional Media over IP Infrastructure

with added -22 for JPEG XS compressed video essence

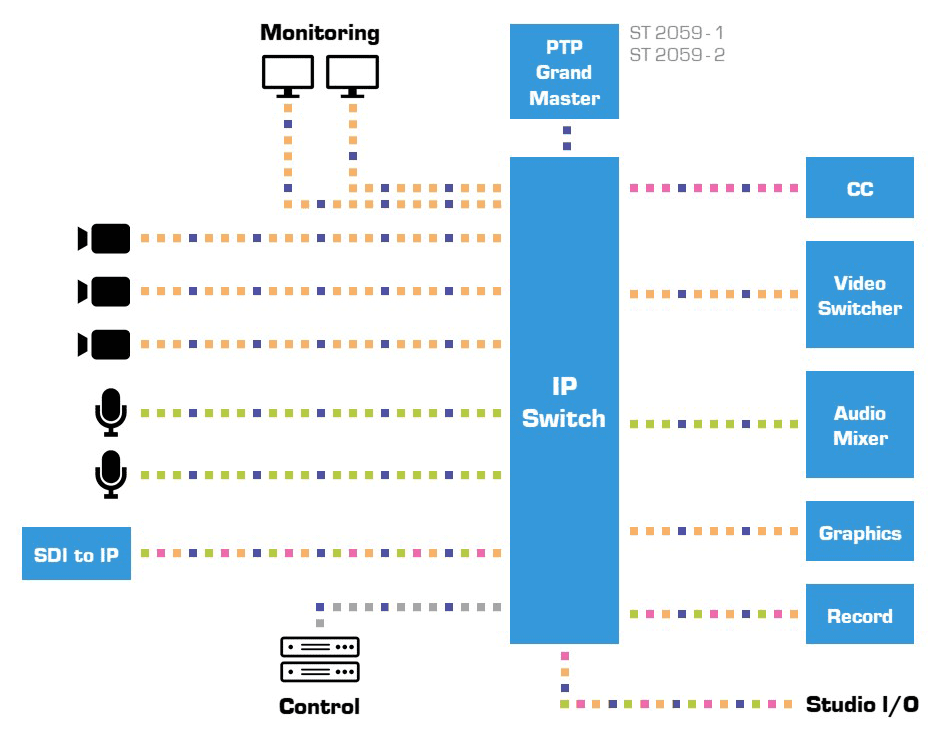

The SMPTE ST 2110 standards for professional media over IP infrastructures are a major contributor to the industry’s movement toward IP-based infrastructures.

The suite of standards specifies the carriage, synchronization, and description of separated video, audio and ancillary data streams over IP for live production, playout, and other professional media applications.

Because every element is timestamped, each one can be routed separately and brought back together at any endpoint. Compared with SMPTE ST 2022, this synchronized separation of streams simplifies adding metadata such as captions, subtitles, Teletext and time codes, simplifies video editing, and eases tasks such as processing multiple audio languages and types.

Today, the industry has embraced the standard suite, and many vendors offer equipment and solutions based on SMPTE ST 2110. To see vendors offering ST 2110 products, check out the members of the AIMS Alliance, for example, or the JPEG XS in Action page, which highlights several vendors.

Discover all parts of the suite, and how compressed video gets included.

Core System-Level

ST 2110-10 defines the system architecture, PTP-based synchronization, and the relationships between streams. SMPTE ST 2059 (IEEE 1588-2008 / PTP) is used to distribute time and timebase to each device within the system, so that the separate streams can be timestamped against a common clock. The standard specifies the various system clocks and how the RTP timestamps are calculated for video, audio and ANC signals. This lets the component flows (audio, video, metadata) stay synchronized with each other while remaining independent streams.
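As a rough illustration of how an RTP timestamp can be derived from PTP time, the sketch below converts nanoseconds since the PTP epoch into ticks of a media clock and wraps the result to 32 bits. The function name and the 90 kHz video and 48 kHz audio clock rates are assumptions chosen for illustration, not values quoted from this article.

```python
NANOS_PER_SECOND = 1_000_000_000
RTP_TIMESTAMP_MODULUS = 1 << 32  # RTP timestamps are 32-bit and wrap around


def rtp_timestamp(ptp_time_ns: int, clock_rate_hz: int) -> int:
    """Map time since the PTP epoch (in nanoseconds) to a 32-bit RTP timestamp.

    Hypothetical helper: clock_rate_hz is the media clock rate of the stream.
    """
    # Number of whole media-clock ticks elapsed since the epoch
    ticks = ptp_time_ns * clock_rate_hz // NANOS_PER_SECOND
    # Keep only the low 32 bits, as carried in the RTP header
    return ticks % RTP_TIMESTAMP_MODULUS


# One second after the epoch, with assumed 90 kHz video and 48 kHz audio clocks
video_ts = rtp_timestamp(1 * NANOS_PER_SECOND, 90_000)
audio_ts = rtp_timestamp(1 * NANOS_PER_SECOND, 48_000)
```

Because both timestamps derive from the same PTP time, a receiver can align a video frame and an audio packet even though they travel as independent streams.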

Part 21 defines a timing model for SMPTE 2110-10 video RTP streams as measured leaving the RTP sender, and defines the sender SDP parameters used to signal the timing properties of such streams (e.g. narrow linear sender, wide linear sender). It ensures smooth video delivery.

Essence Transport Standards

ST 2110-20 specifies the real-time, RTP-based transport of uncompressed active video essence over IP networks. It defines an SDP-based signalling method for the image technical metadata needed to receive and interpret the stream. It supports resolutions up to 32K x 32K pixels, which comfortably covers the currently trending UHD formats, as well as Y’Cb’Cr’, RGB, XYZ and I’Ct’Cp’ color spaces, HDR and HFR content, and sampling and bit-depth combinations such as 4:2:2/10, 4:2:2/12, 4:4:4/16, and more.
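To make the SDP-based signalling more concrete, here is a minimal sketch of a receiver reading image metadata from an `a=fmtp` attribute. The sample line and its values are illustrative assumptions rather than a capture from a real device, and `parse_fmtp` is a hypothetical helper, not part of any library.

```python
from typing import Dict, Tuple


def parse_fmtp(line: str) -> Tuple[int, Dict[str, str]]:
    """Split an SDP 'a=fmtp:' attribute into payload type and key/value parameters."""
    prefix = "a=fmtp:"
    if not line.startswith(prefix):
        raise ValueError("not an fmtp attribute")
    # The payload type comes first, followed by ';'-separated parameters
    payload_type, _, params = line[len(prefix):].partition(" ")
    result: Dict[str, str] = {}
    for item in params.split(";"):
        item = item.strip()
        if not item:
            continue
        key, _, value = item.partition("=")
        result[key.strip()] = value.strip()
    return int(payload_type), result


# Illustrative fmtp line for a 1080p60 4:2:2 10-bit stream (values are assumptions)
EXAMPLE = (
    "a=fmtp:96 sampling=YCbCr-4:2:2; width=1920; height=1080; "
    "exactframerate=60; depth=10; TCS=SDR; colorimetry=BT709; "
    "PM=2110GPM; SSN=ST2110-20:2017; TP=2110TPN"
)

payload_type, image_params = parse_fmtp(EXAMPLE)
print(payload_type, image_params["width"], image_params["height"], image_params["depth"])
```

A real receiver would go on to validate these values and reject formats it cannot handle; the sketch only shows where the metadata lives.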

Part 22 defines the standardized way to transport compressed video in SMPTE 2110 IP workflows, in most cases using the lightweight, low-latency, lossless-quality JPEG XS compression (see TicoXS). The IETF RTP payload format for JPEG XS is fully defined, and the Video Services Forum (VSF) has published a technical recommendation, VSF TR08, for SMPTE 2110-22.

Adding compressed video to ST 2110 amplifies the existing advantages of moving to IP-based workflows (flexibility, scalability, unlimited accessibility) by letting users transport high-bandwidth video such as HD, 4K and 8K over cost-effective COTS 1GbE/10GbE networks. It is also useful wherever bandwidth is constrained: WAN links, UHD 4K or 8K, 1/2.5GbE computer interfaces, and so on.

intoPIX TicoXS and TicoXS FIP ultra-low-latency, lossless-quality codecs position compression as a solid, sustainable way to build cost-effective, bandwidth-efficient, high-quality live production workflows on the LAN, over the WAN or in the cloud with JPEG XS. It is by no means inferior to uncompressed video in either quality or latency. Its advantage is bandwidth: it makes it possible to (re)use COTS equipment and existing cables and networks such as 1GbE and 10GbE to carry multiple HD, 4K and 8K streams at a more affordable bandwidth. Note that ST 2110-22 JPEG XS is also used in the IPMX standard.

Examples of Typical Bandwidth Ranges

SMPTE 2110-22 JPEG XS commonly uses compression ratios between 6:1 and 15:1, but this can go up to 20:1 depending on the JPEG XS profile (High or TDC), the selected resolution (e.g. 1080p, 4K, 8K), the frame rate (e.g. 25, 50, 60 fps) and the bit depth (e.g. 8-bit, 10-bit, 12-bit).

| Video Format | SMPTE ST 2110-20 Uncompressed 4:2:2 Bandwidth | SMPTE ST 2110-22 JPEG XS (6:1) | SMPTE ST 2110-22 JPEG XS (10:1) | SMPTE ST 2110-22 JPEG XS (15:1) |
|---|---|---|---|---|
| 1080p60, 4:2:2, 10-bit | ~2.4 Gbps | ~400 Mbps | ~240 Mbps | ~160 Mbps |
| 4Kp60, 4:2:2, 10-bit | ~9.6 Gbps | ~1.6 Gbps | ~960 Mbps | ~640 Mbps |
| 8Kp60, 4:2:2, 10-bit | ~38.4 Gbps | ~6.4 Gbps | ~3.8 Gbps | ~2.56 Gbps |
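The arithmetic behind figures like these can be sketched as follows. This is a simplified estimate that counts active picture samples only and ignores RTP/UDP/IP overhead, so its results land close to, but not exactly on, the rounded values in the table. The function names are hypothetical.

```python
def uncompressed_bps(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Active-picture bit rate in bits per second (no blanking, no packet overhead)."""
    return width * height * fps * bits_per_pixel


def compressed_bps(raw_bps: float, ratio: float) -> float:
    """Bit rate after applying a compression ratio such as 6 (meaning 6:1)."""
    return raw_bps / ratio


# 4:2:2 at 10 bits: 10 bits of luma plus, on average, 10 bits of chroma per pixel
BITS_PER_PIXEL_422_10BIT = 20

FORMATS = {
    "1080p60": (1920, 1080, 60),
    "4Kp60": (3840, 2160, 60),
    "8Kp60": (7680, 4320, 60),
}

for name, (width, height, fps) in FORMATS.items():
    raw = uncompressed_bps(width, height, fps, BITS_PER_PIXEL_422_10BIT)
    cells = [f"{ratio}:1 = {compressed_bps(raw, ratio) / 1e6:.0f} Mbps" for ratio in (6, 10, 15)]
    print(f"{name}: uncompressed {raw / 1e9:.2f} Gbps, " + ", ".join(cells))
```

Dividing a link's usable capacity by one of these compressed rates gives a first estimate of how many streams fit on a 1GbE or 10GbE network.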

ST 2110-30 covers only the real-time, RTP-based transport of PCM digital audio streams over IP networks. It defines an SDP-based signaling method for the metadata necessary to receive and interpret the stream. Non-PCM digital audio signals, which include compressed audio, are beyond the scope of this standard. It uses AES67 as the transport layer, which ensures cross-vendor compatibility in audio networks.
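For a sense of scale, the sketch below estimates the RTP payload size and payload bit rate of a PCM audio stream. The 48 kHz sample rate, 1 ms packet time and 24-bit samples are assumptions reflecting a common AES67-style configuration, not figures taken from this article, and the helper names are hypothetical.

```python
def samples_per_packet(sample_rate_hz: int, packet_time_us: int) -> int:
    """Number of samples per channel carried in one packet."""
    return sample_rate_hz * packet_time_us // 1_000_000


def payload_bytes(sample_rate_hz: int, packet_time_us: int,
                  channels: int, bytes_per_sample: int) -> int:
    """PCM payload size of one packet, excluding RTP/UDP/IP headers."""
    return samples_per_packet(sample_rate_hz, packet_time_us) * channels * bytes_per_sample


def payload_bps(sample_rate_hz: int, channels: int, bytes_per_sample: int) -> int:
    """PCM payload bit rate, independent of how it is packetized."""
    return sample_rate_hz * channels * bytes_per_sample * 8


# Assumed configuration: stereo, 48 kHz, 1 ms packets, 24-bit (3-byte) samples
stereo_packet = payload_bytes(48_000, 1_000, channels=2, bytes_per_sample=3)
stereo_rate = payload_bps(48_000, channels=2, bytes_per_sample=3)
print(f"{stereo_packet} bytes per packet, {stereo_rate / 1e6:.3f} Mbps payload")
```

Compared with the video rates above, uncompressed PCM audio is small, which is one reason it is carried as its own independent stream.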

Part 31 can handle non-PCM audio (e.g. Dolby E and other non-PCM formats). It specifies the real-time, RTP-based transport of AES3-formatted audio signals over IP networks, referenced to a network reference clock.

ST 2110-40 specifies how to use IETF RFC 8331 with ST 2110 to generically wrap ancillary data items in IP packets. It covers the transport of SMPTE ST 291-1 Ancillary (ANC) data packets related to digital video streams (e.g. closed captions, SCTE-104, timecode) over IP networks. This enables break-away routing of audio and VANC.

Supporting Infrastructure Standards, Peripheral Standards and Protocols

These are not part of ST 2110 itself, but they are recommended or required for implementing ST 2110-compliant systems:

- IS-04 Discovery and Registration of IP-based media devices and services.

- IS-05 Connection Management between senders and receivers (routing flows).

- IS-06 Network Control — interaction with SDN controllers.

- IS-07 Event and Tally messages (aligned with ST 2110-41).

- IS-08 Audio Channel Mapping configuration (useful with ST 2110-30).

- BCP-003-01/02 Security best practices (e.g., access control, secure transport).

About SMPTE:

For more than a century, the people of the Society of Motion Picture and Television Engineers® (SMPTE®, pronounced “simp-tee”) have sorted out the details of many significant advances in media and entertainment technology, from the introduction of “talkies” and color television to HD and UHD (4K, 8K) TV. Since its founding in 1916, the Society has received an Oscar® and multiple Emmy® Awards for its work in advancing moving-imagery engineering across the industry. SMPTE has developed thousands of standards, recommended practices, and engineering guidelines, more than 800 of which are in force today.

For more information visit: https://www.smpte.org

Adding the JPEG XS mezzanine compression on ST 2110-22 - intoPIX Solutions for Developers & OEMs

intoPIX has released encoder and decoder IP-cores for FPGA, as well as accelerated SDKs for OEMs and developers, running on Nvidia GPUs and x86-64 Intel or AMD CPUs:

- TicoXS IP Cores - with a full range delivering 8, 10, 12 bit capability, 4:2:2 and 4:4:4 color sampling, HD, 4K, 8K at up to 60fps or 120fps. Thanks to their extremely small footprint, TicoXS encoder and decoder IP-cores fit onto the smallest Altera, AMD Xilinx or Lattice FPGAs, requiring no additional memory and enabling a firmware upgrade of existing FPGA-based systems. See also our companion IPX-RTP-XS IP-cores for 2110-22.

- Fast TicoXS SDKs - that encode and decode HD, 4K or 8K on Intel and AMD x86-64 or ARM 64 CPU processors and on Nvidia GPU.

- Titanium SDK & SoC EDK: intoPIX also provides ST 2110 solutions for developers as an SDK and an Embedded Development Kit for FPGA SoC, Jetson SoC or other ARM-based SoC.

UPDATE: intoPIX has also released a SMPTE 2110-22 Wireshark Dissector available here to parse and verify your SMPTE 2110-22 streams.

Testing the world's first SMPTE 2110 Software Applications - intoPIX Solutions for Professionals

intoPIX has released the Titanium Software Suite for Professionals to enable anyone to run SMPTE 2110 (or IPMX) on a standard computer with Gigabit networks.

- TitaniumViewer is a software application that transforms standard laptops or workstations into efficient, low-latency receivers for SMPTE ST 2110 and IPMX video and audio streams at resolutions up to 8K, ideal for professional AV monitoring, multi-viewing, and real-time production workflows. It fully integrates NMOS discovery, registration and control capabilities.

- TitaniumShow is a software application that enables laptops and workstations to act as SMPTE ST 2110 and IPMX video sources, allowing seamless, ultra-low-latency casting of native or virtual screens—up to 8K resolution—over standard 1G or 2.5G Ethernet networks using JPEG XS compression.


Related content

intoPIX provides a full range of JPEG XS encoders & decoders as FPGA IP-cores supporting HD, 4K and even 8K, 422, 444 & HDR formats, with unique features and an extra small footprint. Discover the TicoXS and TicoXS FIP families of JPEG XS cores.
